Overview

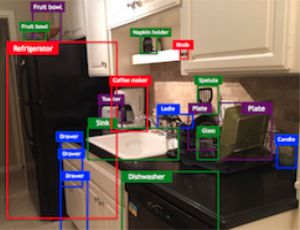

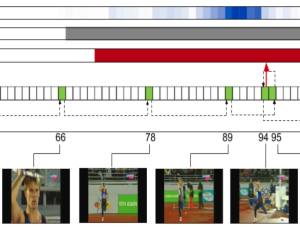

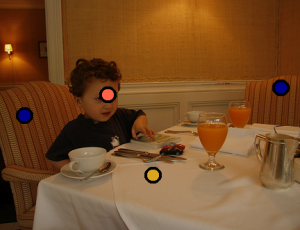

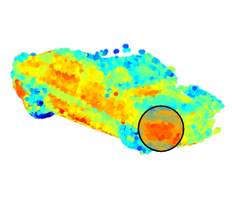

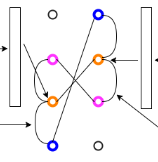

Computer vision researchers at Princeton focus on developing artificially intelligent systems that can reason about the visual world. We are interested in both inferring the semantics of the world and extracting its 3D structure. We believe it is critical to consider the role of a machine as an active explorer in a 3D world, such as a robot, and to learn from rich 3D data that is close to the natural input to the human visual system. We develop a variety of machine learning techniques, such as end-to-end deep learning and reinforcement learning. We collaborate closely with colleagues in graphics, robotics, theory, natural language processing, human-computer interaction, neuroscience, and other research areas.

Faculty & Research Labs

Learn More

Join us for the PIXL lunch speaker series. To get involved in research, please contact the individual PIs.