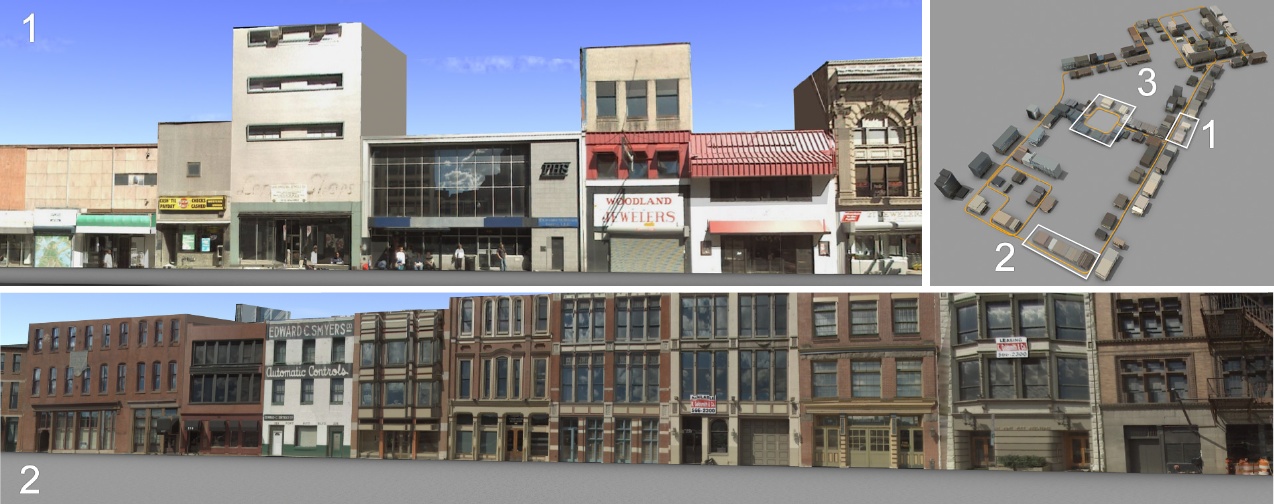

We propose an automatic approach to generate street-side 3D photo-realistic models from images captured along the streets at ground level. We first develop a multi-view semantic segmentation method that recognizes and segments each image at the pixel level into semantically meaningful areas, each labeled with a specific object class such as building, sky, ground, vegetation, or car. A partition scheme is then introduced to separate buildings into independent blocks using the major line structures of the scene. Finally, for each block, we propose an inverse patch-based orthographic composition and structure analysis method for facade modeling that efficiently regularizes the noisy and missing reconstructed 3D data. Our system has the distinct advantage of producing visually compelling results by imposing strong priors of building regularity. We demonstrate the fully automatic system on a typical city example to validate our methodology.
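To make the idea of regularizing noisy and missing facade data concrete, the following is a minimal sketch, not the method of this paper. It assumes each view has already been resampled onto a common facade-aligned (orthographic) grid of depth values, fuses the views with a per-cell median, and fills unobserved cells with a row median as a crude stand-in for a building-regularity prior. The function names `compose_orthographic_depth` and `fill_missing_by_row`, the grid layout, and the sample values are all hypothetical.

```python
from statistics import median


def compose_orthographic_depth(depth_maps):
    """Fuse per-view depth maps sampled on one facade-aligned grid.

    Each map is a list of rows; None marks a cell the view did not observe.
    Returns the per-cell median of the observed values, or None where
    no view saw the cell. (Illustrative assumption, not the paper's algorithm.)
    """
    rows, cols = len(depth_maps[0]), len(depth_maps[0][0])
    fused = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            samples = [m[r][c] for m in depth_maps if m[r][c] is not None]
            fused_row.append(median(samples) if samples else None)
        fused.append(fused_row)
    return fused


def fill_missing_by_row(fused):
    """Fill unobserved cells with the median of their row.

    This is a deliberately crude regularity prior: it assumes depth
    varies little along a horizontal line of the facade.
    """
    filled = []
    for row in fused:
        observed = [v for v in row if v is not None]
        fallback = median(observed) if observed else None
        filled.append([fallback if v is None else v for v in row])
    return filled


# Three hypothetical views of a 2 x 3 facade grid (depth offsets from the facade plane).
views = [
    [[0.0, 0.1, None], [0.5, None, 0.5]],
    [[0.0, 0.0, None], [0.6, None, 0.5]],
    [[0.2, 0.0, None], [None, None, 0.4]],
]
fused = compose_orthographic_depth(views)
filled = fill_missing_by_row(fused)
```

The median is used here because it tolerates a single outlying view per cell; a real system would replace the row-median fill with a structure analysis of the facade.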

This work is supported by Hong Kong RGC Grants 618908 and 619107. We thank Google for the data and a Research Gift, and Honghui Zhang for help with segmentation.